Quick Summary

ChatGPT Health adds a dedicated health space inside ChatGPT where people can ask health and wellness questions and, in some cases, connect records or wellness apps for more personalized answers. Even though the launch examples lean medical and fitness, behavioral health and addiction topics will surface because mental health is part of health, many people already ask AI chatbots for mental health guidance, and substance use often overlaps with co-occurring mental health symptoms. For treatment providers, this shifts the patient research loop: more callers will arrive “AI-informed,” using your website to verify what they heard and your admissions team to confirm fit and next steps. Programs that explain levels of care, co-occurring treatment, safety boundaries, and the admissions process in clear language will earn trust faster and convert more qualified calls.

Key Takeaways

- ChatGPT Health will influence behavioral health and addiction decisions because users already seek mental health advice from AI tools, and co-occurring conditions are common.

- Expect more “AI-informed” callers who want clear answers on fit, level of care (IOP vs PHP vs residential), and what happens next.

- Update high-intent pages to act as a verification layer: program fit, co-occurring care, first-week expectations, and insurance verification.

- Train admissions teams to handle “ChatGPT told me…” questions with calm boundaries and a fast path to assessment.

OpenAI launched ChatGPT Health on January 7, 2026. It is a dedicated Health space inside ChatGPT where people can ask health and wellness questions and optionally connect medical records and wellness apps so answers can be grounded in their own context. OpenAI positions it as support for care, not a replacement for clinicians, and says Health conversations are kept separate and are not used to train foundation models.

At first glance, the product reads as “medical, wellness, and fitness.” That is fair. The launch examples focus on test results, appointment prep, nutrition, workouts, and insurance tradeoffs. But it would be a mistake for behavioral health and addiction treatment providers to assume this stops at physical health. Behavioral health and addiction topics will show up inside ChatGPT Health because that is already how people use AI chatbots: to ask about mood, anxiety, distress, and coping, especially when they want privacy. Research in JAMA Network Open found meaningful use of generative AI for mental health advice among U.S. adolescents and young adults.

Mental health is not separate from health in the eyes of public health authorities. The World Health Organization treats mental health as an integral part of health. For addiction treatment programs, the overlap is even tighter. SAMHSA and NIMH both describe how mental health disorders and substance use disorders often co-occur, and why evaluating them together matters.

This article explains what changes for patient behavior, where the risk lives, and what providers should update across content, admissions, and patient education so more people reach care faster, with less confusion.

What OpenAI Launched and What It Is Not

ChatGPT Health is a Health space inside ChatGPT for health and wellness conversations. Users can connect sources such as Apple Health and other wellness apps, and users in the U.S. can optionally connect medical records through a partner network.

OpenAI also states clear boundaries:

- Health is designed to support care and is not intended for diagnosis or treatment.

- Health chats and related data are not used to train foundation models.

- Health is separated from the rest of ChatGPT, with health-specific memory and controls, and OpenAI says Health information does not flow back into non-Health chats.

- OpenAI’s own Help Center states ChatGPT Health is “for personal wellness” and that HIPAA does not apply to consumer health products like ChatGPT Health.

For independent reporting and context, you can read summaries from Reuters and TIME.

Will ChatGPT Health Include Mental Health and Addiction Topics?

OpenAI’s launch post does not list “behavioral health” as a separate category, but the product is framed as health and wellness support, and user behavior will naturally expand into mental health and substance use topics.

1) Public health authorities define mental health as part of health

The World Health Organization explicitly states that mental health is an integral part of health, and uses the line “there is no health without mental health.” You can cite that directly using WHO’s mental health topic page.

If a product is designed for “health and wellness,” it is naturally adjacent to mental health questions because mental health is part of the definition of health used by major global authorities. That does not mean the tool is a therapist. It means the user’s intent will include mental health, whether providers like it or not.

2) People already use generative AI chatbots for mental health advice at scale

A nationally representative survey published in JAMA Network Open (McBain et al., 2025) reports on adolescents and young adults using generative AI for mental health advice when feeling sad, angry, or nervous. You can reference the study directly here: Use of Generative AI for Mental Health Advice Among US Adolescents and Young Adults.

RAND also summarized the same finding in a press release that is accessible to business readers: One in Eight Adolescents and Young Adults Use AI Chatbots for Mental Health Advice.

So even if ChatGPT Health’s marketing examples lean physical, user behavior does not.

3) Addiction and behavioral health are clinically intertwined

For addiction treatment brands, the overlap is not theoretical. SAMHSA’s co-occurring disorders page explains how mental health problems and substance use disorders often occur together and why. You can cite: Mental Health and Substance Use Co-Occurring Disorders.

For a clinical framing, NIMH also covers this overlap: Finding Help for Co-Occurring Substance Use and Mental Disorders.

Bottom line: ChatGPT Health is not a dedicated mental health or addiction tool, and it is not positioned as treatment. But mental health and substance use topics will show up inside it because that is what users already ask AI for, and because those topics are inseparable from health for a large share of patients seeking care.

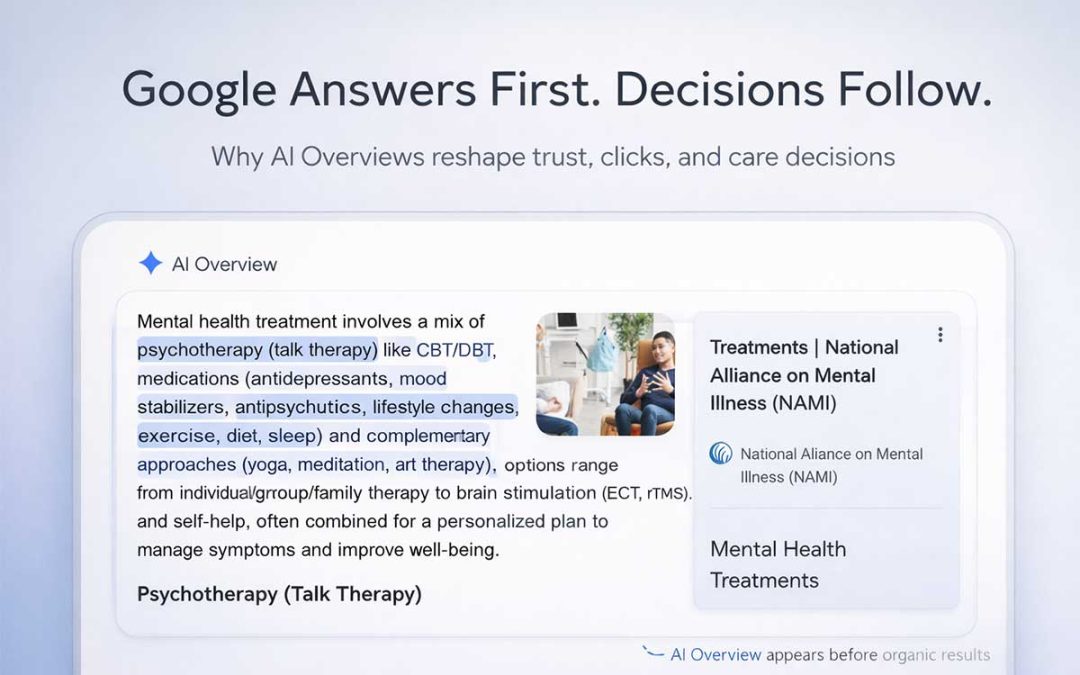

The New Patient Journey: AI First, Verification Second, Intake Third

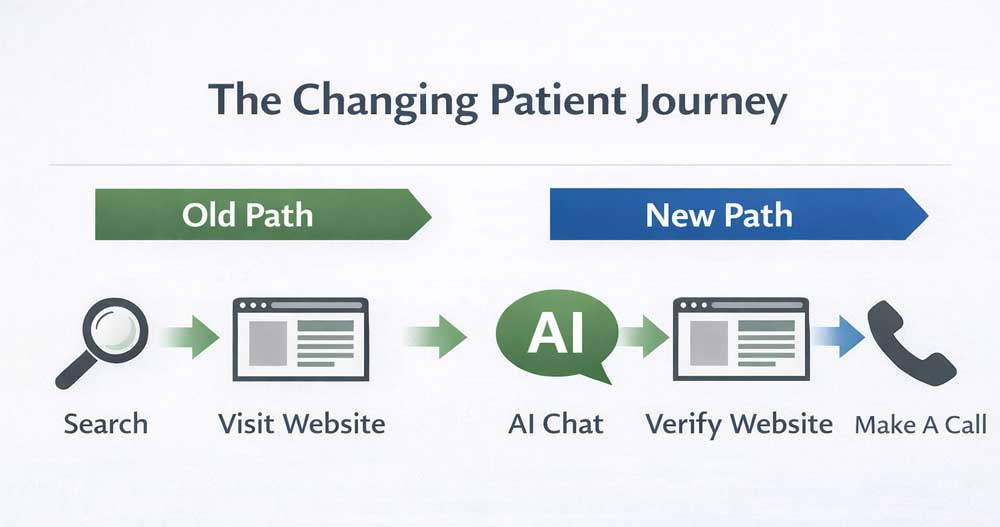

AI is changing how prospective patients research treatment. For years, the typical path looked like:

Google search → a handful of websites → one call

ChatGPT Health adds a new first step that compresses the research phase. Patients already use ChatGPT to make sense of health information and prepare for medical conversations, and Health makes that behavior easier and more centralized. The new path looks more like:

ChatGPT Health conversation → provider website verification → intake call

This matters because many behavioral health and addiction patients research privately for longer before contacting a program. A tool that supports private research tends to increase pre-call preparation, which means the first admissions conversation starts later in the decision process.

What providers will notice in the coming months

Admissions teams should expect more callers who say:

- “I think I need PHP, not IOP, because…”

- “I have trauma symptoms and substance use. Does your program treat both?”

- “What therapies do you use, and how do you measure progress?”

- “What is the first week like, and what do you do if symptoms spike?”

These high-quality questions are not a problem; they are an opportunity for you and your team. Providers who answer clearly, clarify boundaries, and offer the next step will earn trust faster.

The Upside for Patients: Clearer Questions, Less Shame, Faster Movement

In behavioral health and addiction treatment, the first barrier is often emotional: fear, shame, or uncertainty about what care actually looks like. Many people do private research because it feels safer than telling a human what is going on.

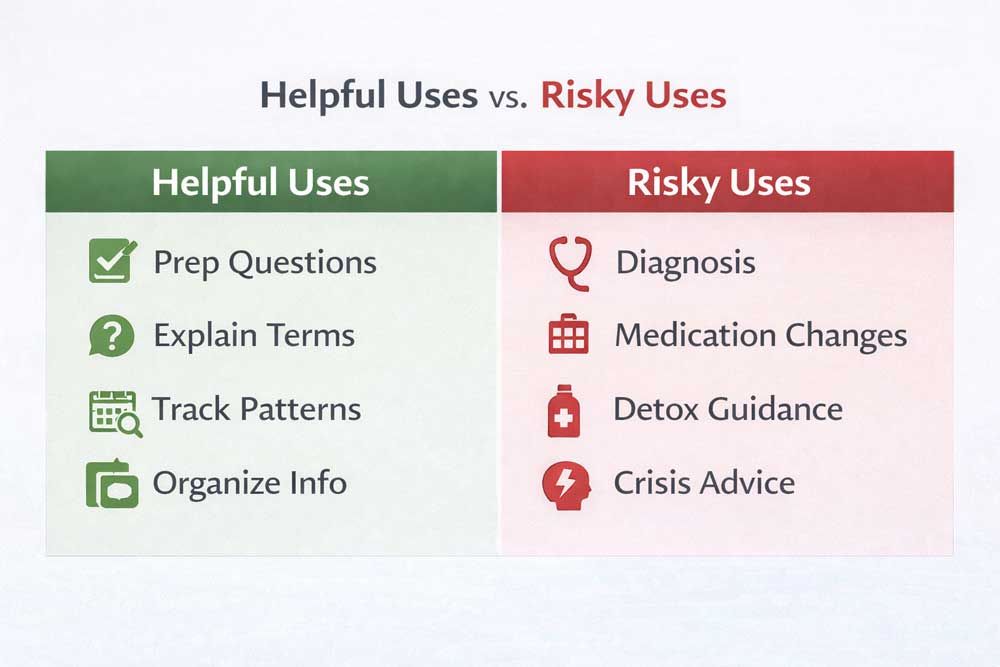

A tool like ChatGPT Health encourages:

- Private rehearsal: “How do I describe my symptoms to a clinician?”

- Better appointment and intake prep: building a list of questions and summarizing timelines

- Pattern thinking: connecting sleep, mood, substance use, and stress over time (especially when a user is tracking in apps)

For many people, the first real benefit is emotional: they feel less alone and more articulate. That often translates into a more productive assessment and a higher chance they follow through.

The Downside: Confident-Sounding Answers Can Still Be Wrong or Unsafe

The core risk with consumer AI in health is not intent. It is error and overconfidence, especially when a user’s input is incomplete.

That concern has been raised in mainstream reporting around ChatGPT Health, including questions about safety frameworks and the lack of medical device regulation in some markets. See, for example, The Guardian’s reporting and additional analysis such as TIME’s overview of privacy and accuracy concerns.

For behavioral health and addiction, the risk is amplified because:

- Symptoms overlap (withdrawal, trauma, anxiety, sleep loss)

- Safety thresholds matter (crisis, psychosis risk, detox risk)

- People can be tempted to self-manage instead of getting evaluated

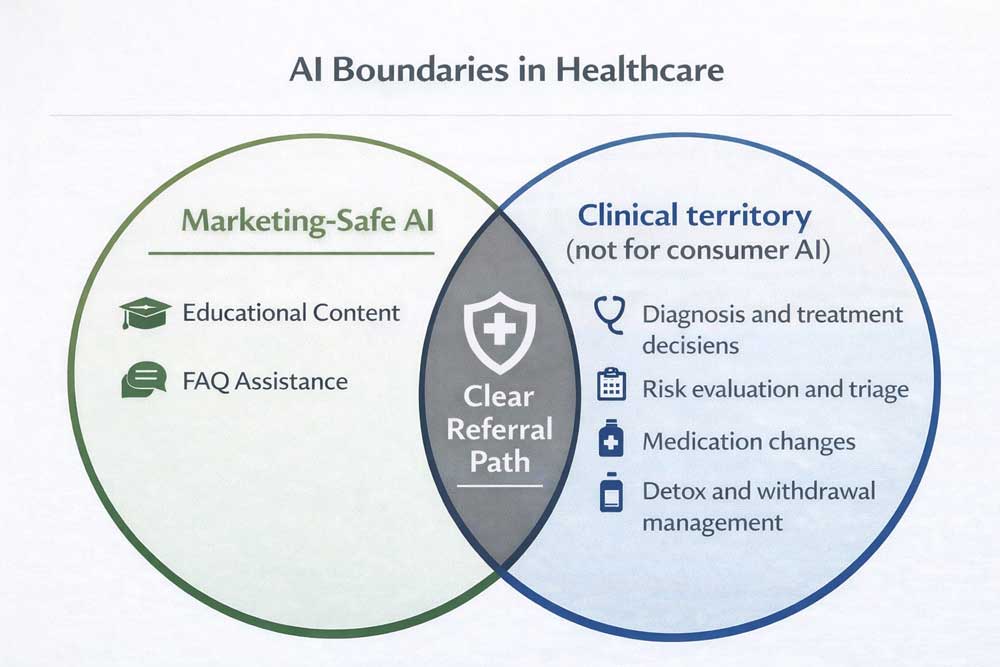

Providers can reduce harm by adding a simple boundary statement on key pages and reinforcing it on calls: AI can help organize questions, but it cannot evaluate risk or determine a level of care.

Privacy and Compliance: Set Expectations Clearly

OpenAI emphasizes encryption, isolation, and non-training for Health conversations. At the same time, OpenAI’s Help Center explicitly states ChatGPT Health is a consumer product and HIPAA does not apply to it.

That gap creates a messaging challenge: patients may assume a “health space” carries medical privacy protections, and providers will need to reset expectations gently. Your messaging should be calm and simple:

- Encourage patients to avoid sharing highly sensitive information in consumer tools.

- Invite them to share details during an assessment in your protected clinical workflow.

For providers and marketers, the simplest operational rule is:

Do not instruct prospective patients to paste protected or highly sensitive details into consumer AI tools.

If you use AI internally, use a HIPAA-appropriate workflow and vendor setup.

If you want to dig deeper into how consumer health data is regulated outside HIPAA, the Federal Trade Commission has helpful guidance. You can review the FTC’s overview of consumer health information beyond HIPAA and the Health Breach Notification Rule.

What Providers Should Update Now: Content, CRO, and Admissions

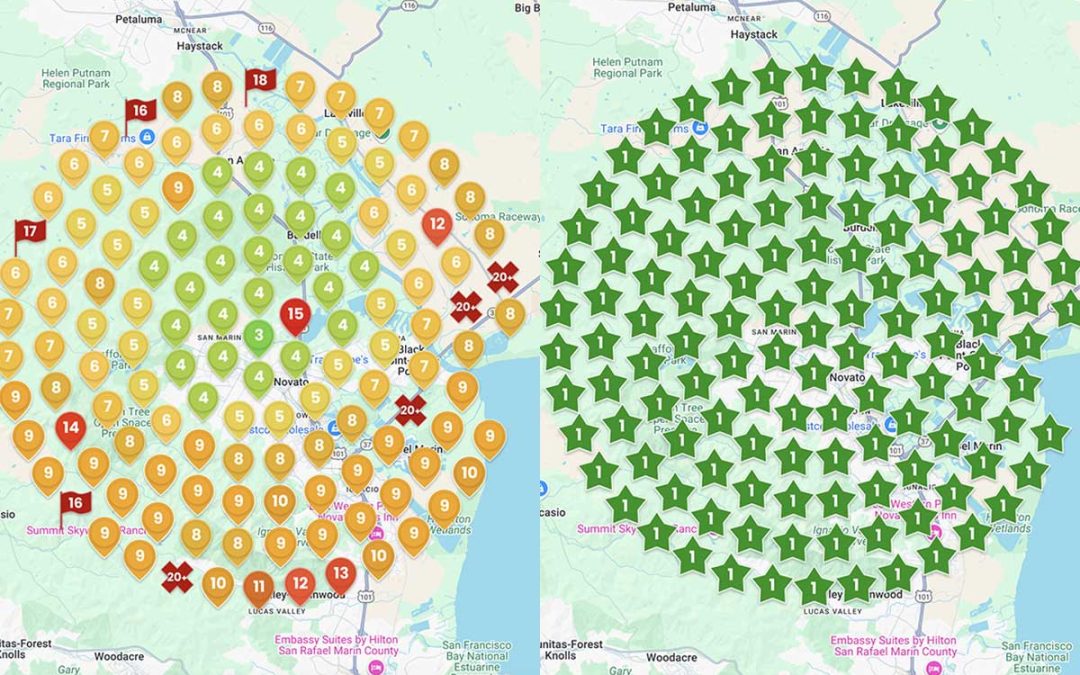

1) Make the website a “verification layer,” not a brochure

Patients will use your site to confirm what AI suggested. Prioritize pages that answer the questions AI-informed callers bring:

- Levels of care comparisons (IOP vs PHP vs residential)

- Dual diagnosis clarity (what you treat, how you evaluate it)

- “What happens next” after the first call

- What the first week looks like

- Insurance verification, explained simply

2) Add a short “AI-era FAQ” to high-intent pages

Examples:

- “Can AI tell me what level of care I need?”

- “What should I bring to an assessment call?”

- “How do you treat co-occurring anxiety, trauma, or depression?”

- “How do you handle medication questions?”

- “What if I’m worried about withdrawal or safety?”

3) Train admissions teams to handle “ChatGPT told me…” without friction

A simple pattern works:

- Validate the question

- Clarify what the program can evaluate

- Move to the next step (assessment, benefits check, clinician call)

What to Watch Next in 2026

Three things will shape how big this becomes:

- Rollout details and feature expansion for Health over time

- More scrutiny from media and regulators about consumer AI health safety and accountability

- The public’s evolving expectations around privacy, even when HIPAA does not apply

The Bottom Line for Behavioral Health and Addiction Providers

ChatGPT Health is not treatment, and OpenAI does not position it that way. Still, it is becoming a new front door for health questions. Behavioral health and addiction topics will show up there because mental health is part of health, people already use AI chatbots for mental health guidance, and substance use and mental health often overlap.

That shift changes what happens before the first call. Prospective patients and families will arrive with more “AI-informed” questions, stronger expectations for clarity, and less patience for vague pages that do not explain fit, level of care, and next steps.

Providers who communicate clearly will earn trust faster and convert more qualified calls. Providers who stay vague will see more confusion, more objections, and more missed opportunities to connect people to care.

Want help adapting your website and intake flow to this new research pattern?

ProDigital Healthcare helps treatment centers convert high-intent demand into qualified calls through addiction treatment marketing and behavioral and mental healthcare marketing, with SEO, conversion-focused pages, admissions-aligned content, and a compliant paid media strategy. If you want a fast, practical roadmap, request an AI-era content and conversion audit. Contact ProDigital Healthcare, and we will map the highest-impact updates for your programs, your markets, and your goals.